Metrics for the Tabular Classification model with GUI


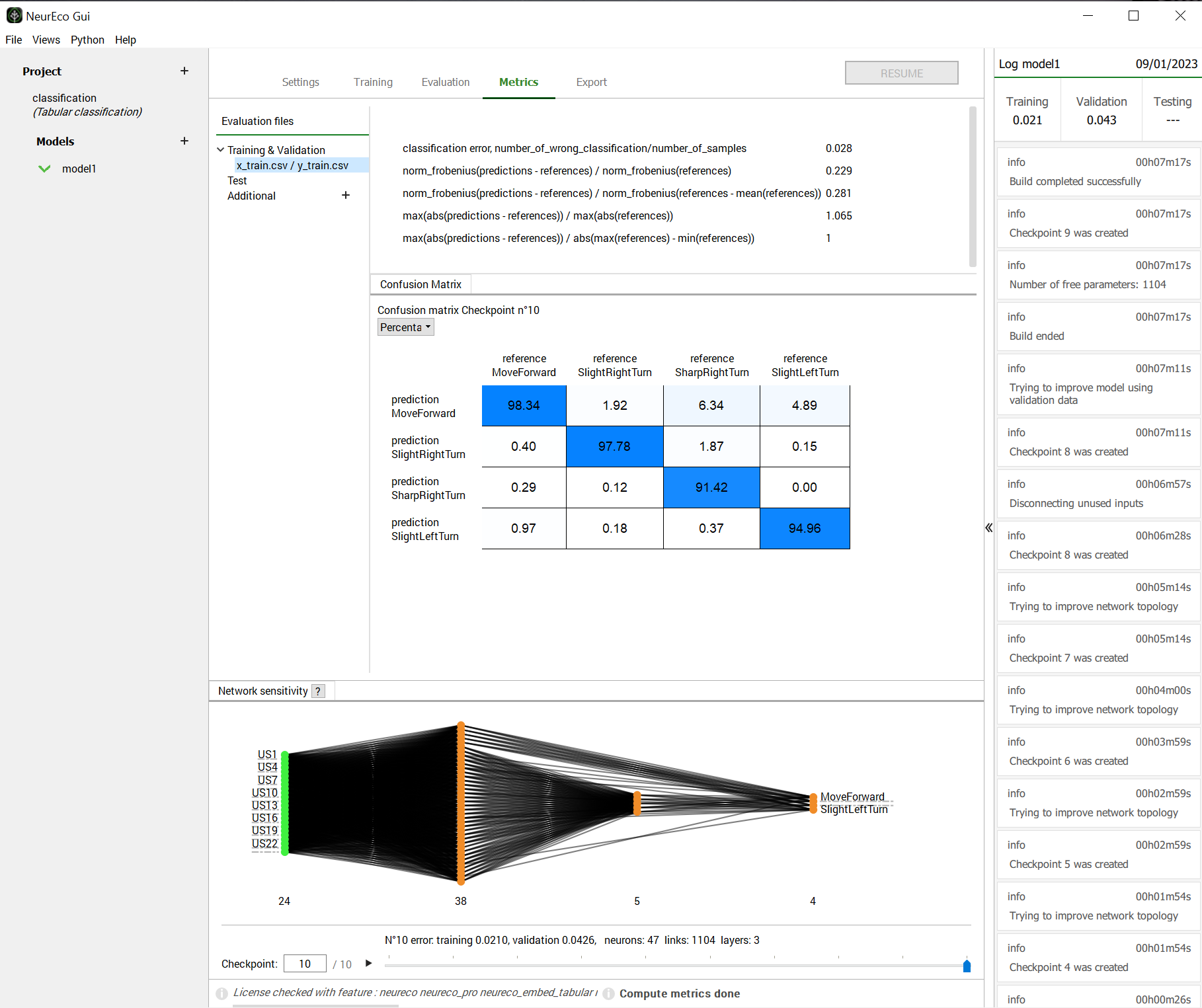

The Metrics tab calculates a set of metrics on the provided dataset.

The metrics provided for classification are listed below, where \(\|\cdot\|_{fro}\) denotes the Frobenius norm:

\[\text{classification error} = \frac{\text{number of wrong classifications}}{\text{number of samples}}\]

\[\frac{\|\text{prediction} - \text{reference}\|_{fro}}{\|\text{reference}\|_{fro}}\]

\[\frac{\|\text{prediction} - \text{reference}\|_{fro}}{\|\text{reference} - \operatorname{mean}(\text{reference})\|_{fro}}\]

\[\frac{\max(|\text{prediction} - \text{reference}|)}{\max(|\text{reference}|)}\]

\[\frac{\max(|\text{prediction} - \text{reference}|)}{\max(|\text{reference}|) - \min(|\text{reference}|)}\]
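The formulas above can be sketched in plain Python as follows. This is a minimal illustration, not the tool's own implementation: it assumes that predictions and references are given as flat, equal-length sequences (class labels for the classification error, numeric values for the other four metrics), and all function names are hypothetical.

```python
import math
from typing import Hashable, List, Sequence


def _check_lengths(prediction: Sequence, reference: Sequence) -> None:
    # Hypothetical helper: every metric compares samples pairwise.
    if len(prediction) != len(reference) or len(reference) == 0:
        raise ValueError("prediction and reference must be non-empty and of equal length")


def _fro(values: Sequence[float]) -> float:
    # Frobenius norm of the flattened values: square root of the sum of squares.
    return math.sqrt(sum(v * v for v in values))


def _diff(prediction: Sequence[float], reference: Sequence[float]) -> List[float]:
    # Element-wise prediction - reference.
    return [p - r for p, r in zip(prediction, reference)]


def classification_error(prediction: Sequence[Hashable], reference: Sequence[Hashable]) -> float:
    # Number of wrong classifications / number of samples.
    _check_lengths(prediction, reference)
    wrong = sum(p != r for p, r in zip(prediction, reference))
    return wrong / len(reference)


def relative_fro_error(prediction: Sequence[float], reference: Sequence[float]) -> float:
    # ||prediction - reference||_fro / ||reference||_fro
    _check_lengths(prediction, reference)
    return _fro(_diff(prediction, reference)) / _fro(reference)


def centered_fro_error(prediction: Sequence[float], reference: Sequence[float]) -> float:
    # ||prediction - reference||_fro / ||reference - mean(reference)||_fro
    _check_lengths(prediction, reference)
    mean_ref = sum(reference) / len(reference)
    return _fro(_diff(prediction, reference)) / _fro([r - mean_ref for r in reference])


def relative_max_error(prediction: Sequence[float], reference: Sequence[float]) -> float:
    # max|prediction - reference| / max|reference|
    _check_lengths(prediction, reference)
    max_abs_diff = max(abs(d) for d in _diff(prediction, reference))
    return max_abs_diff / max(abs(r) for r in reference)


def range_max_error(prediction: Sequence[float], reference: Sequence[float]) -> float:
    # max|prediction - reference| / (max|reference| - min|reference|)
    _check_lengths(prediction, reference)
    abs_ref = [abs(r) for r in reference]
    max_abs_diff = max(abs(d) for d in _diff(prediction, reference))
    return max_abs_diff / (max(abs_ref) - min(abs_ref))
```

For example, with prediction `[1.0, 2.0, 3.0]` and reference `[1.0, 2.0, 4.0]`, `relative_max_error` returns 0.25 and `range_max_error` returns 1/3. In this sketch the last four metrics raise `ZeroDivisionError` when their denominator vanishes, for instance when the reference is constant in `centered_fro_error`.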

Switch to the Metrics tab.

To calculate metrics, click a dataset in the Evaluation files section. Use the + button to add more datasets.

The results are displayed, and the Metrics tab also provides a confusion matrix for the selected dataset.

An example of a result looks as follows:

Figure: GUI operations: metrics evaluation for Classification
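A confusion matrix counts, for each pair of classes, how many samples of a given reference class were predicted as a given class. The sketch below is a minimal illustration, not the tool's implementation: it assumes rows correspond to reference classes and columns to predicted classes (a common convention that may differ from the layout shown in the GUI), and the function name is hypothetical.

```python
from typing import Hashable, List, Optional, Sequence, Tuple


def confusion_matrix(
    prediction: Sequence[Hashable],
    reference: Sequence[Hashable],
    labels: Optional[Sequence[Hashable]] = None,
) -> Tuple[List[Hashable], List[List[int]]]:
    """Return (labels, matrix), where matrix[i][j] counts samples whose
    reference class is labels[i] and whose predicted class is labels[j]."""
    if len(prediction) != len(reference):
        raise ValueError("prediction and reference must have equal length")
    if labels is None:
        # Default: every class seen in either sequence, sorted (labels must be comparable).
        labels = sorted(set(reference) | set(prediction))
    labels = list(labels)
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for pred_label, ref_label in zip(prediction, reference):
        # Row = reference class, column = predicted class.
        matrix[index[ref_label]][index[pred_label]] += 1
    return labels, matrix
```

For example, `confusion_matrix([0, 1, 1, 2], [0, 1, 2, 2])` returns `([0, 1, 2], [[1, 0, 0], [0, 1, 0], [0, 1, 1]])`. The diagonal holds the correctly classified samples, so the classification error equals one minus the diagonal sum divided by the total number of samples (here 1 - 3/4 = 0.25).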

Note

By default, metrics are evaluated with the last model available in the checkpoint.

Use the checkpoint slider at the bottom to select any other available model and compute its metrics.